If you have glanced at the headlines recently, you could be forgiven for thinking the drone hobby is coming back down to earth. Between sweeping restrictions in the United States and tighter registration rules in the UK, the carefree "wild west" years of flying are clearly behind us. Yet despite the extra admin, the sector itself is thriving. Recent reports put the global drone photography services market at close to the one‑billion‑dollar mark and growing at around 19–25 percent a year, which firmly positions aerial imagery as a serious commercial service rather than a weekend toy.

What Has Changed in the Rules?

The big question many pilots are asking is how the latest rules actually affect them. The answer depends heavily on where you live.

In the United States, the updated FCC "Covered List" is the main story. In December 2025, certain foreign‑made drones and drone components, including DJI products, were added to the list, which effectively bars the FCC from granting them new equipment authorisations. In practice, newly designed foreign models cannot be approved for import, marketing or sale in the US unless they qualify for a specific waiver. Existing drones tell a different story: aircraft that already have FCC approval remain legal to purchase, own and fly, and retailers can still sell those previously authorised models. That makes the situation more of a squeeze on future variety than an overnight flying ban.

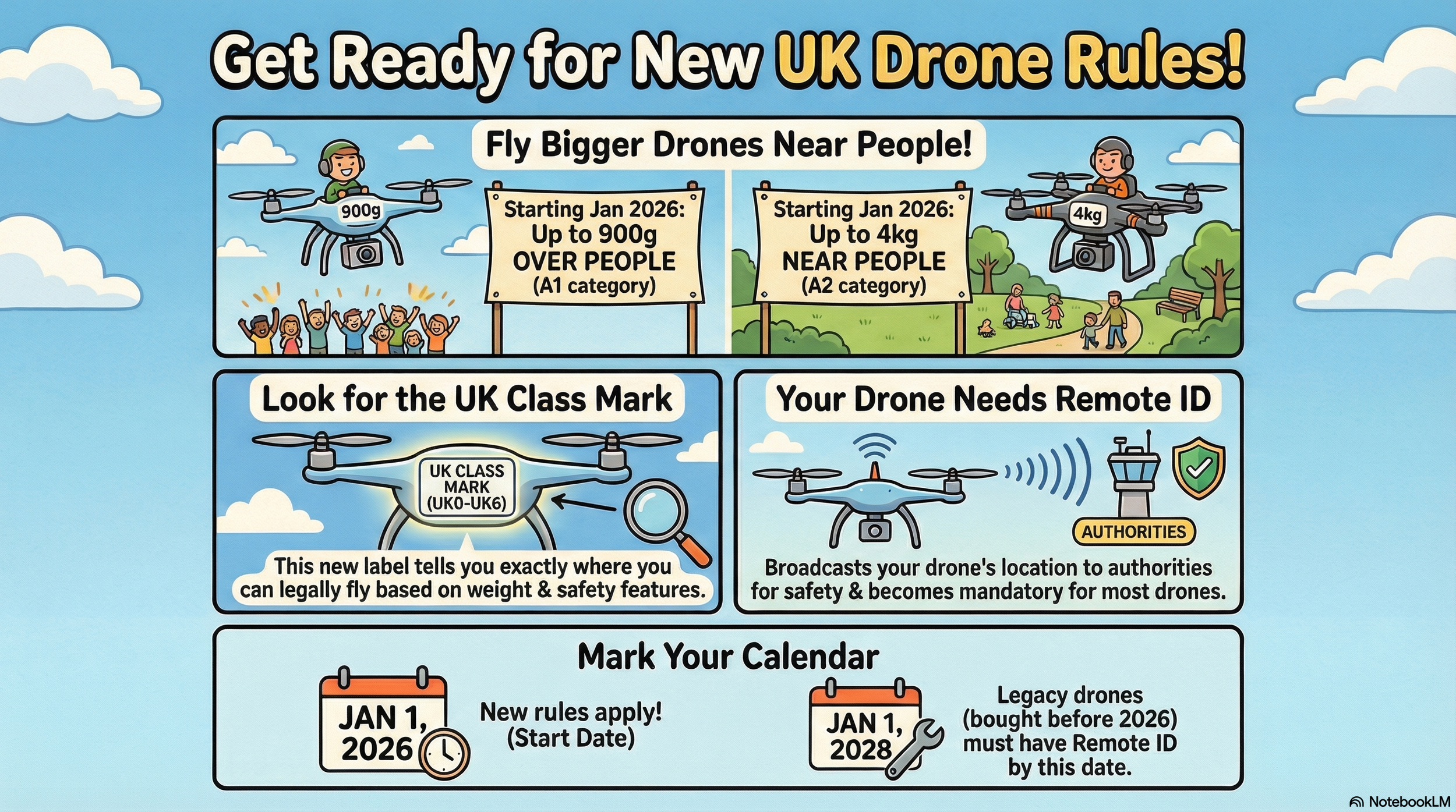

In the United Kingdom, the Civil Aviation Authority has confirmed a major shift in weight thresholds. From 1 January 2026, anyone flying a drone or model aircraft that weighs 100 grams or more must hold a Flyer ID, and if that drone has a camera (or weighs 250 grams or more), they also need an Operator ID. This is a big change from the previous 250‑gram threshold that applied to most registration, and it brings a large number of small "everyday" drones, especially popular mini camera drones, into the regulated category.

Regulators are also getting tougher on bad behaviour. In the US, the FAA and other authorities have made clear they intend to enforce the rules more strictly when flights put people at risk, and civil penalties for serious violations can run into the tens of thousands of dollars per incident. The message is straightforward: casual flying is still welcome, but reckless flying increasingly has real financial consequences.

The Rise of the Lightweight Drone

All of this has turned drone "weight‑watching" into a serious buying consideration. Many pilots are moving towards lighter aircraft to reduce friction with the rules while still getting strong image quality.

On the prosumer side, there is intense interest in compact models that squeeze larger‑than‑phone‑sized sensors into sub‑250 gram frames, offering high‑resolution video, good low‑light performance and multi‑directional obstacle avoidance in a bag‑friendly package. For beginners, the sweet spot tends to be affordable drones with strong safety features, such as built‑in propeller guards, simplified flight modes and easy hand launches, which make that first flight much less intimidating.

The regulatory pressure in the US has also opened the door wider for alternative brands. With new foreign‑made models facing an approval freeze, manufacturers that already have authorised aircraft in the market, or those operating outside the traditional big‑name ecosystem, are getting more attention, particularly when they can offer 3‑axis gimbals and 4K recording at a lower price. The result is a slow but noticeable diversification of the shelves, even as some pilots remain loyal to existing line‑ups.

Are People Actually Giving Up?

So with more paperwork and stricter enforcement, are hobbyists dumping their drones and walking away? The broader picture suggests the opposite.

Market research on drone services and drone photography shows steady growth through 2024 and 2025, with strong forecasts into the early 2030s, particularly in sectors like real estate, construction monitoring, inspections and media. That does not look like a hobby in decline. While there is certainly some regulatory fatigue in online communities, usage data and revenue projections point towards more flights, more paid work and more creative output, not less.

On the second‑hand market, much of the activity looks less like a mass exit and more like a "fleet refresh". Many pilots are selling older, heavier aircraft in favour of lighter, regulation‑friendly models that are easier to keep compliant under the 2026 rules in both the UK and US. It is a natural response: swap one or two bulky legacy drones for a compact, modern model that is simpler to register, carry and justify to clients.

What 2026 Really Means for Drone Photography

Drone photography has grown up. It has moved from being treated as a novelty to being recognised as a serious imaging tool that sits alongside your main camera kit. The entry barrier is undeniably higher than it was a few years ago, with registration requirements, Remote ID timelines and more stringent enforcement now part of the landscape. At the same time, the technology has never been better: smaller drones, better sensors, improved safety features and expanding commercial demand are all pulling the market upwards.

For bloggers, creators and photographers, the takeaway is simple. The sky is not closing. It is just becoming more organised. If you are willing to learn the rules, pick the right aircraft and fly responsibly, drone photography in 2026 is still very much on the way up.