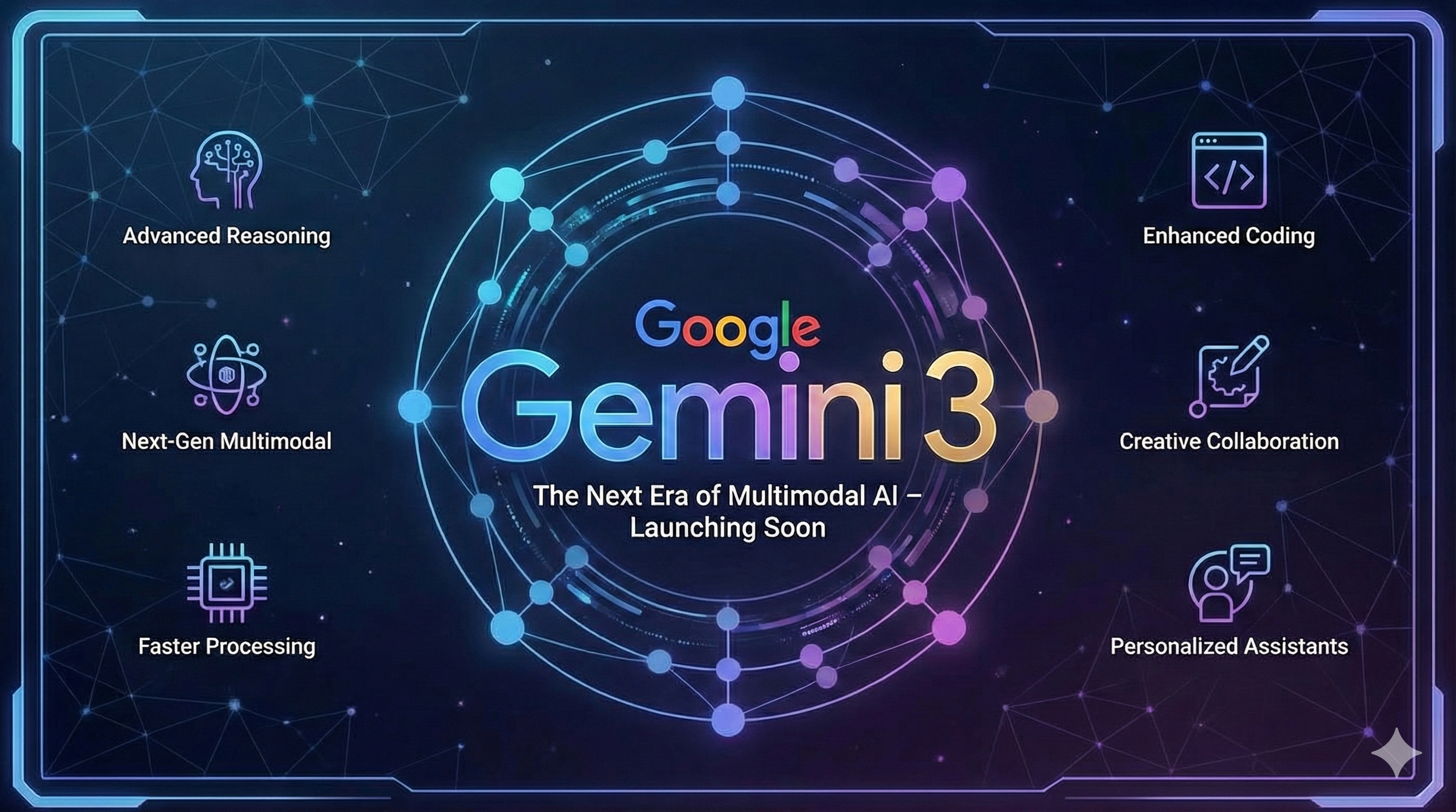

Insight 1: From Assistant to Thinking Partner

The biggest change in Gemini 3 isn't just better benchmark scores. It's a deeper level of understanding that changes how we interact with AI. Google designed the model to "grasp depth and nuance" so it can "peel apart the overlapping layers of a difficult problem."

The result is a noticeably different experience. Google says Gemini 3 "trades cliché and flattery for genuine insight, telling you what you need to hear, not just what you want to hear." That marks an important shift in how we work with AI: instead of a tool that simply answers questions, the model becomes a collaborative partner for working through difficult problems.

This new relationship demands more from us as users. When your main tool acts like a critical colleague rather than an obedient helper, you need to step up your own thinking and collaboration skills to get the most out of it.

Google CEO Sundar Pichai put it this way:

> It's amazing to think that in just two years, AI has evolved from simply reading text and images to reading the room.

Insight 2: Deep Think Mode Brings Specialized Reasoning

Google introduced Gemini 3 Deep Think mode with this launch. This enhanced reasoning mode is specifically designed to handle "even more complex problems." The name isn't just marketing. It's backed by real performance improvements on some of the industry's toughest tests.

In Google's published results, Deep Think outperforms the already strong Gemini 3 Pro on challenging benchmarks. On Humanity's Last Exam, it scored 41.0% (without tools), compared with 37.5% for Gemini 3 Pro. On GPQA Diamond, it reached 93.8%, ahead of Gemini 3 Pro's 91.9%.

This matters because it points to a future where AI isn't a single, one-size-fits-all intelligence. Instead, we're seeing specialized "modes" for different kinds of thinking. That isn't only about raw power; it's also a way to manage compute, spending more processing on hard problems and less on simple ones, which will be crucial for keeping AI sustainable as it scales.

Insight 3: Antigravity Changes How Developers Build Software

Perhaps the most forward-thinking announcement was Google Antigravity, a new "agentic development platform." This represents a fundamental change in how developers work with AI, aiming to "transform AI assistance from a tool in a developer's toolkit into an active partner."

What sets Antigravity apart is what it can actually do. Its AI agents have "direct access to the editor, terminal and browser," which lets them "autonomously plan and execute complex, end-to-end software tasks." The potential impact goes well beyond code suggestions: it could redefine the developer's role. Instead of writing every line of code, developers become directors of AI agents that can build, test, and validate applications on their own.

Insight 4: AI Agents Can Now Handle Long-Term Tasks

A major challenge for AI has always been "long-horizon planning." This means executing complex, multi-step tasks over extended periods without losing focus or getting confused. Gemini 3 shows a real breakthrough here.

The model demonstrated this on Vending-Bench 2, a benchmark in which it ran a simulated vending machine business for a "full simulated year of operation without drifting off task." Google says this capability should carry over to everyday uses like "booking local services or organizing your inbox."

This new reliability over long sequences of actions is the critical piece that could finally deliver on the promise of truly autonomous AI. It marks AI's shift from a single-task tool you use (like a calculator) to a persistent process manager you direct (like an executive assistant who handles your projects for months at a time).

Looking Ahead: A New Era of AI Interaction

These aren't isolated features. They're the building blocks for the next generation of AI. The main themes from the Gemini 3 launch (collaborative partnership, specialized reasoning, agent-first development, and long-term reliability) all point toward a future that goes beyond simple prompts and responses.

The focus has clearly shifted from basic question-and-answer interactions to integrated, autonomous systems built for real complexity. As AI moves from a tool we command to a partner we collaborate with, we'll need to adapt how we think, work, and create alongside it.